The Future of Data Experience: From Clunky Gearshifts to Sentient Co-Pilots.

August 18, 2025

Recently, I was given the rather daunting task of presenting my personal vision for the future of data to a distinguished panel of experts, people who have forgotten more about storage than I’ll ever know. In a moment of either bravery or foolishness, I decided to collect and refine those thoughts here. So, before we dive in, a crucial disclaimer: what follows is purely my opinion. This is my personal crystal ball at work, a collection of predictions and possibilities shaped by my experiences. It is not, by any means, an official company strategy or product roadmap. It’s simply one person’s perspective on where this incredible industry is headed.

I still have flashbacks to my early days in tech—the frigid data centers, the deafening roar of the fans, and the sheer manual labor of racking and stacking servers. We treated storage like a beast of burden: a necessary, but cumbersome, part of the infrastructure we had to provision, manage, and tame. Our primary job was to keep the lights on and the disks spinning. The “Data Experience,” or DX, was simply the desperate hope that nothing broke during the night.

Fast forward to today, and that world feels like a distant memory. The conversation has completely changed, driven by an inescapable force: the relentless data deluge. We are creating data at a pace that is outstripping our ability to manage it with traditional tools and mindsets. The old model of buying bigger boxes and hiring more people to manage them is not just inefficient; it’s unsustainable.

We are on the cusp of a radical transformation, one that redefines our relationship with data entirely. It’s a journey from a clunky manual transmission, where every action required direct, laborious human intervention, to a sleek, fully autonomous vehicle that anticipates our needs. (For the record, I personally love the feeling of control you get from driving a manual car, so this is purely a technological analogy!) The future isn’t just about storing more data; it’s about making that data intelligent, portable, and ridiculously easy to manage. It’s about elevating the Data Experience.

To understand where we’re going, let’s look at the roadmap for the next 5, 10, and 15 years. This isn’t just a story about technology; it’s a story about the evolution of intelligence itself.

The Next 5 Years (2025-2030): The Era of the Self-Driving Data Center

The changes coming in the next five years aren’t science fiction; they are the logical and necessary maturation of trends already in motion. This is the era where infrastructure finally gets out of its own way, moving from a reactive to a proactive state. This is where your storage admin finally gets to take their hands off the wheel and focus on the road ahead.

Autonomous Operations Become Table Stakes

For decades, IT operations have been defined by firefighting. An alert fires, an engineer investigates, a problem is remediated. The promise of AIOps is to break this cycle for good. In the next five years, storage platforms will become truly self-driving. Powered by sophisticated machine learning models, they will constantly analyze trillions of telemetry points to predict potential issues before they impact performance. They’ll perform root-cause analysis in seconds, not hours, and automatically optimize workloads, rebalance data, and manage capacity without human intervention. This shift will fundamentally change the role of the infrastructure engineer, freeing them from mundane operational tasks to focus on higher-value initiatives like application modernization and data strategy.

The Rise of “Storage as Code”

The wall between developers and infrastructure is being dismantled, brick by brick. The “as-a-Service” model, popularized by the cloud, is becoming the default expectation for on-premises infrastructure. This is the heart of the “Storage as Code” movement. Gone are the days of filing a ticket and waiting two weeks for a LUN to be provisioned. Instead, developers will define their storage requirements—capacity, performance tiers, data protection policies—directly within their application’s code or CI/CD pipelines. With a simple API call, the intelligent infrastructure will instantly provision and configure the necessary resources. This creates a frictionless experience that accelerates development cycles and treats storage not as a siloed function, but as a fluid, integrated component of the software development lifecycle.
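
Kubernetes already offers a glimpse of this model: an application declares a PersistentVolumeClaim, and the cluster’s storage provisioner creates the volume. The sketch below turns a developer-facing storage request into such a claim. The PersistentVolumeClaim fields are standard Kubernetes, but the tier names, the StorageClass names they map to, and the `example.com/protection-policy` annotation are hypothetical placeholders I made up for illustration.

```python
"""Sketch: turn a developer's storage requirements into a Kubernetes PVC manifest."""
import json
from dataclasses import dataclass

# Hypothetical mapping from performance tiers to StorageClass names; a real
# cluster defines its own classes.
TIER_TO_STORAGE_CLASS = {
    "gold": "fast-nvme",
    "silver": "standard-ssd",
    "bronze": "capacity-hdd",
}


@dataclass(frozen=True)
class StorageRequest:
    name: str
    capacity_gi: int
    tier: str
    protection_policy: str  # hypothetical policy name, e.g. "snapshot-hourly"


def to_pvc_manifest(req: StorageRequest) -> dict:
    """Build a core/v1 PersistentVolumeClaim for the requested storage."""
    if req.tier not in TIER_TO_STORAGE_CLASS:
        raise ValueError(f"unknown tier: {req.tier}")
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {
            "name": req.name,
            # Hypothetical annotation that a storage controller could act on.
            "annotations": {"example.com/protection-policy": req.protection_policy},
        },
        "spec": {
            "accessModes": ["ReadWriteOnce"],
            "storageClassName": TIER_TO_STORAGE_CLASS[req.tier],
            "resources": {"requests": {"storage": f"{req.capacity_gi}Gi"}},
        },
    }


if __name__ == "__main__":
    request = StorageRequest("orders-db", capacity_gi=500, tier="gold",
                             protection_policy="snapshot-hourly")
    # kubectl accepts JSON manifests as well as YAML.
    print(json.dumps(to_pvc_manifest(request), indent=2))
```

Checked into the application repository and applied from a CI/CD job (for example with `kubectl apply -f`), a file like this replaces the two-week ticket with a pull request.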

The Unstoppable Shift to Subscription Models

The financial model is evolving alongside the technology. The massive, upfront capital expenditures of traditional hardware purchases are giving way to flexible, pay-as-you-go subscription models. This isn’t just an accounting trick; it’s a strategic advantage. “Storage-as-a-Service” provides an evergreen platform, where the hardware and software are continuously and non-disruptively refreshed behind the scenes. This eliminates the dreaded forklift upgrade, kills the multi-year migration project, and ensures businesses are never running on aging technology. The data center budget will look less like a mortgage and more like a utility bill—predictable, scalable, and always current.

The Next 10 Years (2030-2035): The Thinking Data Center

As we approach 2035, the evolution takes a significant leap. The infrastructure stops being a passive recipient of commands and becomes an active participant in data processing. The line between storage, compute, and AI will blur into nonexistence. The car won’t just drive itself; it will analyze traffic, weather, and your calendar to suggest a better route or even a different destination entirely.

Data-Aware Infrastructure: From Dumb Pipes to Smart Libraries

For too long, storage has been a “dumb” container. It knows a file exists but has no idea what that file contains. That is about to change dramatically. Future storage systems will be “data-aware,” meaning they will natively understand and classify data at the moment of ingestion. By parsing metadata and even content, the system will know if it’s storing genomic sequences, financial transactions, video streams, or sensor logs. This enables a new level of intelligence. The system can automatically apply the correct data protection, security, and governance policies without human intervention. It’s the difference between a warehouse that just stacks boxes and a sentient library that has read every book and can offer you profound insights and connections.
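
A very small version of “classify at the moment of ingestion” can be built from file signatures (the magic bytes at the start of a file) plus a policy table. In this sketch, the PDF, PNG, and MP4-style `ftyp` checks are real file-format signatures and the FASTA/FASTQ check is a loose heuristic, while the data classes and every policy value are hypothetical. A genuinely data-aware system would look much deeper into content and metadata.

```python
"""Sketch: classify data at ingest by its leading bytes, then attach a policy."""
from dataclasses import dataclass


@dataclass(frozen=True)
class Policy:
    replicas: int
    encrypt: bool
    retention_days: int


# Hypothetical policies per data class; a real deployment would derive these
# from governance rules rather than hard-coding them.
POLICIES = {
    "genomic": Policy(replicas=3, encrypt=True, retention_days=3650),
    "video": Policy(replicas=2, encrypt=False, retention_days=365),
    "document": Policy(replicas=3, encrypt=True, retention_days=2555),
    "image": Policy(replicas=2, encrypt=False, retention_days=365),
    "unknown": Policy(replicas=2, encrypt=True, retention_days=90),
}


def classify(head: bytes) -> str:
    """Guess a data class from the first bytes of an object."""
    if head.startswith(b"%PDF-"):
        return "document"
    if head.startswith(b"\x89PNG\r\n\x1a\n"):
        return "image"
    if head[4:8] == b"ftyp":  # ISO base media format (MP4/MOV; HEIC shares it)
        return "video"
    if head[:1] in (b">", b"@"):  # FASTA / FASTQ record headers (loose heuristic)
        return "genomic"
    return "unknown"


def ingest(data: bytes) -> tuple[str, Policy]:
    """Classify an incoming object and return its class plus the policy to apply."""
    data_class = classify(data[:16])
    return data_class, POLICIES[data_class]


if __name__ == "__main__":
    print(ingest(b">chr1\nACGTACGT\n"))
    print(ingest(b"%PDF-1.7 ..."))
```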

The Convergence of Compute and Storage

Data has gravity. As datasets swell into the petabytes and exabytes, moving them to a separate compute cluster for processing becomes slow, costly, and a security risk. The logical solution is to flip the model: move the compute to the data. In this next decade, storage arrays will become high-performance data processing hubs. They will integrate accelerators like GPUs, DPUs, TPUs, and FPGAs directly into the chassis. This will allow organizations to perform real-time analytics, AI/ML model training, and complex data transformations directly where the data lives, slashing latency and unlocking new possibilities for discovery.
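
Some back-of-the-envelope arithmetic shows why data gravity matters. The sketch below computes ideal transfer time at full line rate (ignoring protocol overhead, contention, and compression, so real transfers take longer), then compares shipping a whole petabyte to a compute cluster with filtering at the storage layer so that only matching data moves. The 100 Gb/s link and the 1% selectivity are illustrative assumptions.

```python
"""Back-of-the-envelope: moving data to compute vs. pushing compute to data."""

PB = 10**15  # bytes (decimal petabyte)


def transfer_hours(num_bytes: float, link_gbps: float) -> float:
    """Ideal transfer time in hours at full line rate, ignoring all overheads."""
    bits = num_bytes * 8
    return bits / (link_gbps * 1e9) / 3600


if __name__ == "__main__":
    dataset = 1 * PB
    link = 100          # Gb/s, an assumed link speed
    selectivity = 0.01  # assume the query needs only 1% of the data

    move_all = transfer_hours(dataset, link)
    pushdown = transfer_hours(dataset * selectivity, link)
    print(f"ship everything: {move_all:.1f} h")                   # 22.2 h
    print(f"filter at storage, ship results: {pushdown:.2f} h")   # 0.22 h
```

Even under these generous assumptions, a single pass over a petabyte ties up the link for most of a day, while the pushed-down query moves a hundredth of the data.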

Realizing the Dream of Universal Data Portability

The hybrid cloud is the reality for nearly every enterprise, but true workload portability remains a challenge. The next era will solve this with the emergence of a universal data plane. Through a combination of standardized data formats, a global metadata layer, and powerful abstraction software, the boundaries between on-premises data centers and public clouds (AWS, Azure, GCP) will dissolve. Data will move fluidly and securely to wherever it’s needed most, without costly and complex refactoring. This finally delivers on the promise of the cloud: run any workload, anywhere, without vendor lock-in.

The 15+ Year Horizon (2035-2040): The Sentient Data Center

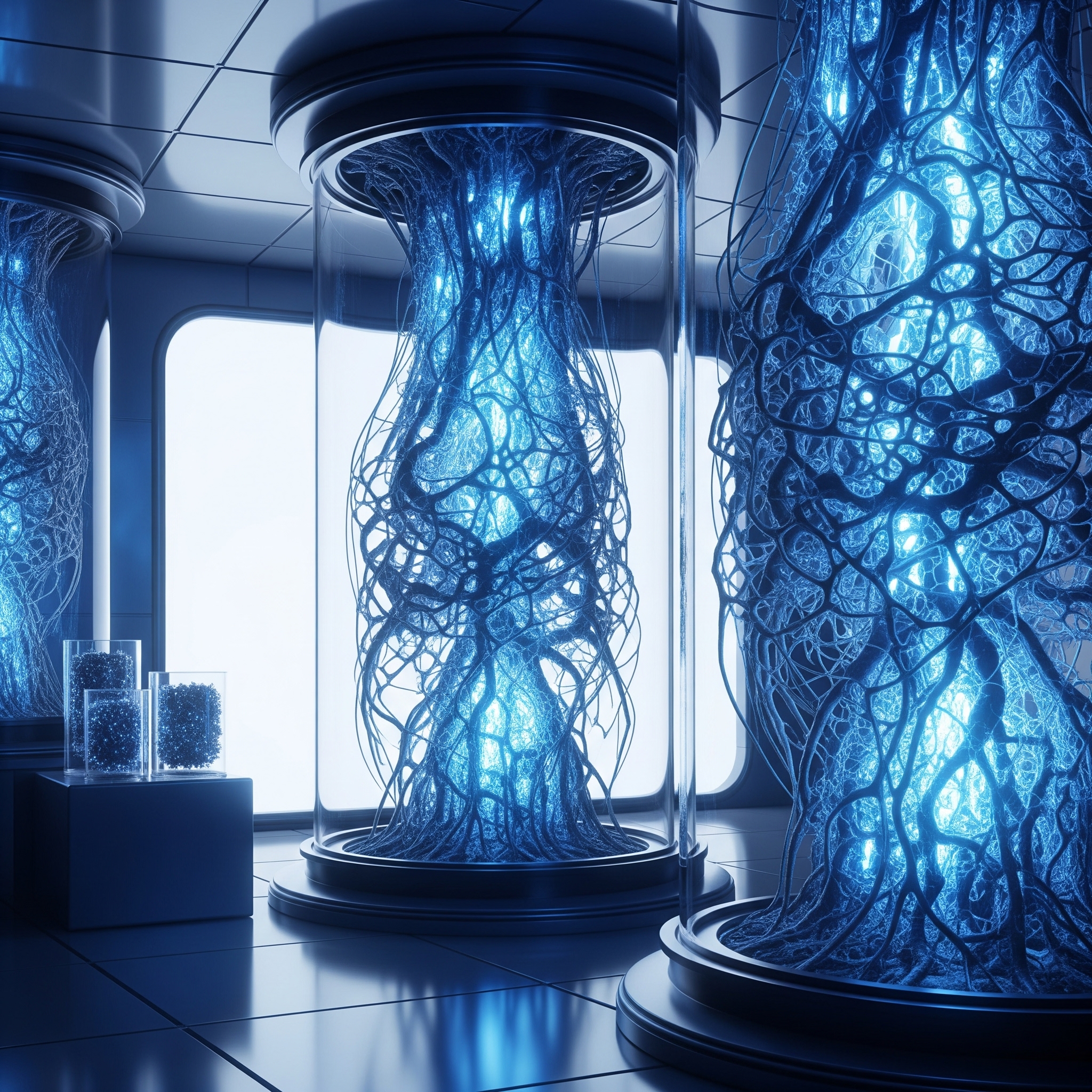

Now, we look further out, to the edge of what’s currently imaginable. If the last stage was about an intelligent system, this final stage is about a sentient one. Here, the infrastructure transcends its role as a tool and becomes a true partner, evolving and even creating in ways we are just beginning to comprehend. I really wanted to use an image from The Matrix, but didn’t want to infringe on any copyrights. Just imagine Agent Smith and his endless “digital twins”…

Self-Evolving Architectures: The System That Improves Itself

This is a concept that pushes the boundaries of AI. We are not just talking about a system that learns from data to optimize itself; we are talking about a system that can fundamentally rewrite its own core logic. Based on deep analysis of long-term workload patterns, a self-evolving storage architecture could design and deploy a new, more efficient data layout, or develop a novel compression algorithm tailored perfectly to the data it holds. It’s a technological parallel to biological evolution, where successful adaptations are encoded and passed on, allowing the system to become better, faster, and smarter over time, entirely on its own.
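
A truly self-evolving architecture is far beyond anything I can show in a few lines, but a much humbler ancestor of the idea is a system that experiments on its own data and keeps what works. The sketch below samples stored data, tries the three codecs in Python’s standard library (`zlib`, `bz2`, and `lzma`), and adopts whichever compresses best. It selects among existing algorithms rather than inventing new ones, and the function name and sample workload are my own illustrative choices.

```python
"""Sketch: pick the best compression codec by experimenting on sampled data."""
import bz2
import lzma
import zlib

CODECS = {
    "zlib": zlib.compress,
    "bz2": bz2.compress,
    "lzma": lzma.compress,
}


def choose_codec(samples: list[bytes]) -> tuple[str, float]:
    """Return (codec name, compression ratio) for the best codec on the samples.

    The ratio is compressed size / original size, so lower is better.
    """
    original = sum(len(s) for s in samples)
    if original == 0:
        raise ValueError("need non-empty samples")
    results = {
        name: sum(len(compress(s)) for s in samples) / original
        for name, compress in CODECS.items()
    }
    best = min(results, key=results.get)
    return best, results[best]


if __name__ == "__main__":
    # Hypothetical workload: repetitive log lines.
    logs = [b"2025-08-18 INFO request served in 12ms\n" * 200 for _ in range(5)]
    name, ratio = choose_codec(logs)
    print(f"adopt {name}: compressed to {ratio:.1%} of original size")
```

A real system would also weigh CPU cost and read latency, not just size, and would rerun the experiment as workloads drift.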

Bio-Integrated Storage: The Future is Organic

The limitations of silicon will eventually be reached. To accommodate the exponential growth of archival data, we will turn to the most efficient storage medium known to science: DNA. With a storage density millions of times greater than flash memory, all of the world’s digital data could theoretically be stored in a single coffee cup. DNA is also incredibly stable, capable of preserving information for thousands of years with minimal energy. While read/write speeds will initially limit its use cases, DNA and other bio-integrated technologies represent the ultimate long-term solution for our “write-once, read-rarely” cold data archives. Storing corporate financial records might one day have more in common with a petri dish than a platter.
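
At its simplest, writing digital data to DNA means mapping bits onto the four nucleotides. The sketch below uses the most naive mapping, two bits per base (00→A, 01→C, 10→G, 11→T). Real DNA storage schemes add error-correcting codes and avoid problematic sequences such as long runs of the same base, so treat this purely as an illustration of the density idea, not a workable encoding.

```python
"""Naive DNA encoding: 2 bits per nucleotide (no error correction)."""

BASES = "ACGT"  # index = 2-bit value: A=00, C=01, G=10, T=11


def encode(data: bytes) -> str:
    """Encode each byte as four nucleotides, most significant bits first."""
    return "".join(
        BASES[(byte >> shift) & 0b11]
        for byte in data
        for shift in (6, 4, 2, 0)
    )


def decode(strand: str) -> bytes:
    """Invert encode(): every four nucleotides become one byte."""
    if len(strand) % 4:
        raise ValueError("strand length must be a multiple of 4")
    out = bytearray()
    for i in range(0, len(strand), 4):
        byte = 0
        for base in strand[i:i + 4]:
            byte = (byte << 2) | BASES.index(base)
        out.append(byte)
    return bytes(out)


if __name__ == "__main__":
    strand = encode(b"Hi")
    print(strand)  # CAGACGGC
    print(decode(strand))
```

At two bits per base, every byte becomes four nucleotides.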

The Emergence of the Ethics Engine

When a system is this autonomous and intelligent, a new question arises: how do we ensure its decisions are not just efficient, but also right? As infrastructure takes on the role of making decisions about data placement, access, and even deletion, a robust governance layer becomes critical. This is the “Ethics Engine.” It will be a sophisticated rules engine that goes beyond performance metrics to enforce policies based on corporate governance, data privacy laws (like GDPR), and even moral and ethical guidelines. It will be the system’s conscience, ensuring that as our technology becomes more powerful, it remains aligned with our human values.

Conclusion: From a Bigger Box to a Smarter Partner

The evolution of data storage is one of the most compelling narratives in technology. We are on a remarkable journey from a passive container to an active, intelligent, and ultimately indispensable partner in our data strategy. We are finally moving away from the grunt work of managing boxes and focusing on what truly matters: the Data Experience.

The ultimate goal of this technological march—from automated to intelligent to sentient—is not just to build clever machines. It is to unlock the full potential of human knowledge, to make data a seamless, powerful, and accessible asset for discovery and progress. The next 15 years will be a wild ride. Better buckle up.

Final Thought

In the next 15 years, we won’t be managing infrastructure. We’ll be curating experiences.

And the organizations that understand this shift — that treat data not as a burden but as a living, intelligent asset — will define the next era of innovation.

Storage is dead. Long live the data experience.